How to Make Meaningful A/B Test Interpretations

In May, our Head of ASO, Simon Thillay, published a blog post on how to run a reliable Google Play experiment using the A/B/B test method. We began using this method in tests for clients and, in the process, learned a lot about testing but also ran into a few challenges. The first challenge was reliability. Once our results were reliable, we then had to interpret results with very little estimated uplift and results that spanned both positive and negative outcomes. Here are some things we considered while interpreting our test results.

Setting Up Your Test

When you start to set up your test, there are a few things to remember during the design phase. A great place to begin is investigating the current trends in your category and among your main competitors; a lot can be learned from your competitors and the results of the tests they have completed. Next, decide which metadata element you would like to test. Then form a hypothesis about that element and why you think the change will create an uplift in conversion. Lastly, make sure the change you are testing is isolated and aligns with your hypothesis. If multiple elements are tested at the same time, it will be unclear which one actually caused the change in conversion.

As a reminder, the A/B/B method makes it easier to identify unreliable tests. The same variant is used as both variant B and variant C in the experiment setup: “If both B samples provide similar results, then these are likely true positive, whereas if they have different results, one of the two is likely a false positive.” Unfortunately, there isn’t a way to know which result is false.
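If you also have per-arm visitor and installer counts (an assumption: they may come from your own tracking rather than from the Play Console report), one way to put a number on "similar results" is a standard two-proportion z-test between the two identical B arms. This is an illustrative sketch only: the function names, the counts, and the 0.05 significance level are hypothetical, and this is not how Google Play itself evaluates experiments.

```python
import math


def two_proportion_z(installs_b: int, visitors_b: int,
                     installs_c: int, visitors_c: int) -> float:
    """Pooled two-proportion z-statistic for the difference in
    conversion rate between the two identical B arms."""
    p_b = installs_b / visitors_b
    p_c = installs_c / visitors_c
    # Under the null hypothesis (B and C convert equally), pool both arms.
    pooled = (installs_b + installs_c) / (visitors_b + visitors_c)
    se = math.sqrt(pooled * (1 - pooled) * (1 / visitors_b + 1 / visitors_c))
    if se == 0:
        return 0.0  # both arms at 0% or 100% conversion: no measurable gap
    return (p_b - p_c) / se


def two_sided_p_value(z: float) -> float:
    """Two-sided p-value from the standard normal distribution."""
    return math.erfc(abs(z) / math.sqrt(2))


def b_arms_agree(installs_b: int, visitors_b: int,
                 installs_c: int, visitors_c: int,
                 alpha: float = 0.05) -> bool:
    """Hypothetical A/B/B sanity check: True when the two identical
    B arms show no statistically significant difference at `alpha`."""
    z = two_proportion_z(installs_b, visitors_b, installs_c, visitors_c)
    return two_sided_p_value(z) >= alpha
```

For example, hypothetical arms converting 1,200 of 40,000 and 1,180 of 40,000 visitors pass the check, while 1,200 of 40,000 and 1,000 of 40,000 do not. As with the A/B/B method itself, a failed check flags the test as unreliable without telling you which arm is the false positive, and a passed check does not prove the arms are identical.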

Check out the full post on A/B/B testing with Google Play Experiments

Check Your Reliability

How similar the two B results need to be for a test to count as reliable can vary slightly from test to test, since there is no exact threshold separating accepted results from rejected ones. It comes down to good judgement and an understanding of the elements tested and the sample size. But when the two results are similar, they are likely a true positive and can be analyzed further.
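Because the acceptable gap is a judgement call, one way to keep that judgement consistent across tests is to write it down as an explicit tolerance on the reported uplift ranges. This is a minimal sketch assuming each variant's result is recorded as a low/high percentage range; the `UpliftRange` type, the `ranges_similar` rule, and the example values are hypothetical and not taken from the figure below.

```python
from typing import NamedTuple


class UpliftRange(NamedTuple):
    """Estimated conversion uplift range for one variant, in percent."""
    low: float
    high: float

    @property
    def midpoint(self) -> float:
        return (self.low + self.high) / 2


def ranges_similar(b: UpliftRange, c: UpliftRange,
                   tolerance_pts: float) -> bool:
    """Hypothetical reliability rule: treat the identical B and C variants
    as consistent when both range endpoints differ by at most
    `tolerance_pts` percentage points. Choosing the tolerance is the
    judgement call described in the text."""
    return (abs(b.low - c.low) <= tolerance_pts
            and abs(b.high - c.high) <= tolerance_pts)
```

With a 0.5-point tolerance, ranges of -0.8% to +2.9% and -1.0% to +3.1% count as similar, while -0.8% to +2.9% and +2.0% to +6.5% do not. A larger sample or a bolder change might justify a different tolerance.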

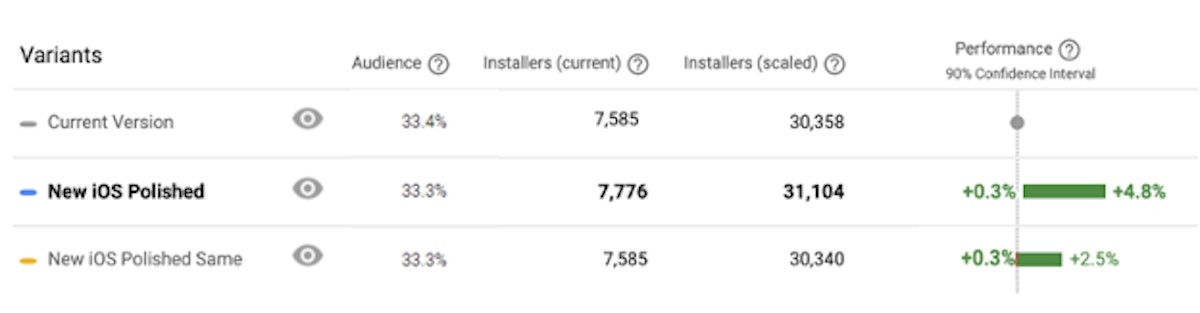

This result is reliable: the two identical B variants produced almost identical values.

Here, the results for variants B and C, which were identical, are very similar and differ only slightly in either direction. This test was considered reliable, so from there we could begin to ask what the results are really telling us.

Interpreting Results With a Small Impact

Once you have a reliable A/B/B test, the estimated change in conversion may still be a small percentage in either direction. Percentages let you compare the potential outcomes of different elements tested, but it is important to consider the actual number of installs behind the estimated percentage change. If you are testing a very popular app that already receives a very high volume of installs, you may view the impact of a given percentage differently than you would for a newly released app with a small user base and only a few hundred daily installs.
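To see why the same percentage can matter more for one app than another, you can translate an estimated uplift into installs. This sketch assumes store traffic stays constant and that the relative uplift applies evenly to current daily installs, which is a simplification; the function names and figures are hypothetical.

```python
def extra_daily_installs(daily_installs: float, uplift_pct: float) -> float:
    """Additional installs per day implied by a relative conversion uplift
    of `uplift_pct` percent, assuming traffic stays the same."""
    return daily_installs * uplift_pct / 100


def install_range(daily_installs: float, low_pct: float,
                  high_pct: float) -> tuple[float, float]:
    """Translate an estimated uplift range (in percent) into a range of
    daily install changes under the same assumption."""
    return (extra_daily_installs(daily_installs, low_pct),
            extra_daily_installs(daily_installs, high_pct))


if __name__ == "__main__":
    # The same hypothetical 1% uplift for a popular app and a new app.
    for label, daily in [("popular app", 500_000), ("new app", 300)]:
        print(label, extra_daily_installs(daily, 1.0))
```

Under these assumptions, a 1% uplift means about 5,000 extra installs a day for an app with 500,000 daily installs, but only about 3 for an app with 300.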

Even without knowing the sample size and number of installers behind a screenshot test, we can explore different interpretations of what the results may indicate. To show how the interpretation can vary, we will use the same results for two different testing scenarios.

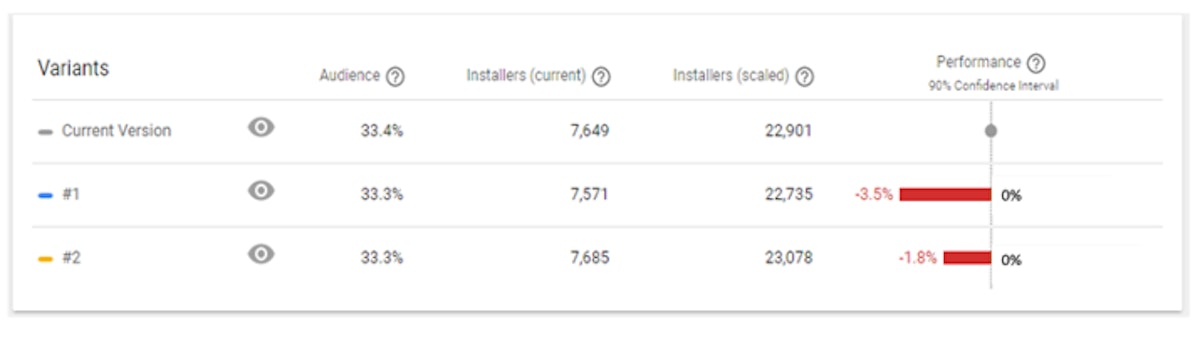

This result indicates that applying the tested variant would likely result in a small uplift.

Scenario 1:

A screenshot test compares an updated version (variants B and C), which focuses on newly released app features and uses a modern screenshot design, against the current version, which has outdated in-app images and a basic layout.

In this scenario, there are a few possible reasons the results are less impactful than expected. First, the new features may not be displayed in a way that lets users clearly identify their purpose or value. Second, the new screenshots may focus too much on the new features and stray too far from the popular features users already love. Lastly, the new design may simply not convince new users as much as the developer had hoped.

In this scenario, we would suggest a deep analysis of the differences and similarities between the two versions to learn what may be performing lower than expected, whether it’s due to removing particular images and phrasing or adding them.

Scenario 2:

A screenshot test compares a slightly updated version (variants B and C), which adds two new in-app images of new features but keeps the same overall design as the current version. Here, results showing only a small uplift may be exactly the expected outcome: minor changes are likely to have less impact than a full redesign of the screenshots.

Interpreting Negative Results

In any scenario, whether the results are strongly or just slightly negative, it’s important to learn why. After the test, complete a full analysis comparing the two tested versions.

This result indicates that applying the tested variant would likely result in a loss in conversion.

Things to consider include:

- Was there enough change between the current and tested variant?

- Is the value proposition still effectively displayed?

- Are new features/product updates special enough to include in the screenshots?

- Are the most popular features still effectively communicated?

- Did the updated design align with new trends?

Interpreting Both Positive and Negative Results

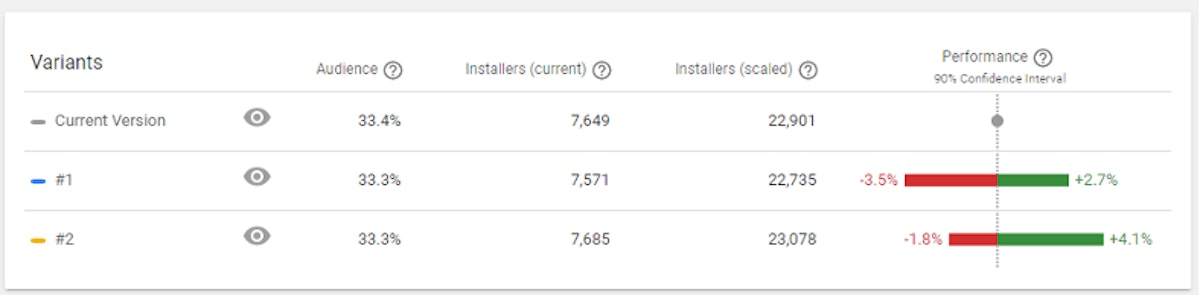

Here, the results indicate that applying the tested version could lead to either an improvement or a decline in conversion of similar size. So what exactly does that mean?

This result indicates that the tested variant could lead to either an increase or a decrease in conversion if applied.

It can be confusing to see results that show both a possible loss and a possible gain in conversion. Results like this can indicate a few things, some of which may also apply to other scenarios. For a screenshot test, they could mean that the change in screenshots wasn’t noticeable enough for users, or that screenshots do not play a large role in conversion for this app. For a short description test, they could similarly mean that the short description plays less of a role in conversion than you thought.
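The result shapes covered in this post can be told apart by where the estimated range sits relative to zero. This is a simplified sketch with a hypothetical function name; it assumes the result is reported as a low/high percentage range and does not model how confident the underlying estimate is.

```python
def classify_result(low_pct: float, high_pct: float) -> str:
    """Label an estimated conversion-change range (in percent).

    positive:     the whole range is above zero (likely uplift)
    negative:     the whole range is below zero (likely loss)
    inconclusive: the range includes zero, so the change could go
                  either way if the variant is applied
    """
    if low_pct > high_pct:
        raise ValueError("low_pct must not exceed high_pct")
    if low_pct > 0:
        return "positive"
    if high_pct < 0:
        return "negative"
    return "inconclusive"
```

An "inconclusive" label is where the questions above come in: the change may not have been noticeable, or the tested element may matter less for conversion than expected.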

There are situations where you may want to apply the tested element even if the results are inconclusive or negative. For example, if the user interface or gameplay has been updated, it is best to always show the most current version of the app or game.

Each A/B/B test raises different points to research and analyze, depending on which part of the store listing is being tested, but we hope this gives some insight into the questions to consider for each type of result. To analyze what your competitors are testing and the outcomes of their tests, start a free trial with AppTweak.

Laurie Galazzo

Justin Duckers
