Improving A/B Test Reliability on Google Play

This article was also presented during the ASO Conference Online on May 13th, 2020.

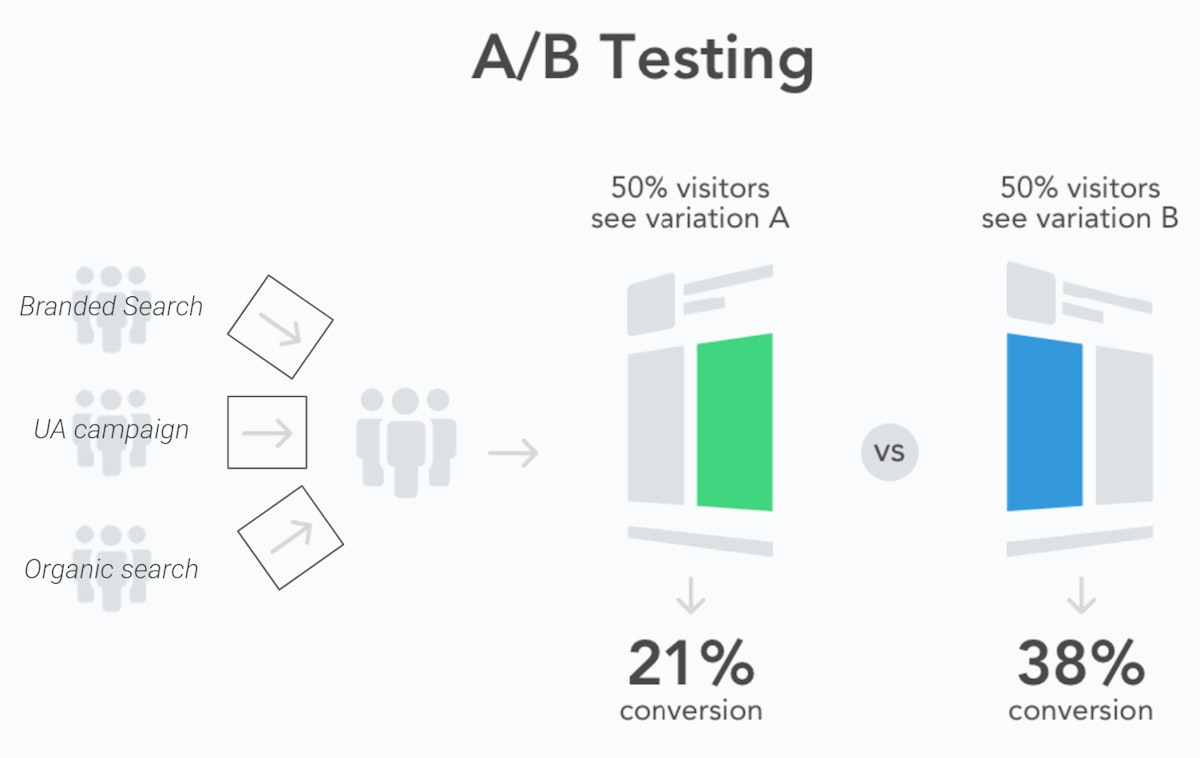

A/B testing has become a standard practice in mobile marketing, and ASO is no exception. The principles are simple:

- divide your audience into several samples

- show them different variants (for instance, different sets of screenshots)

- measure how each variant performs to decide which one to use based on data rather than personal preference

The problem is that test results are only as reliable as the tests themselves. While Google Play has some major benefits, such as running your tests in a native environment, many ASO practitioners have seen the tool predict conversion uplifts that never materialized once they applied the winning variant to 100% of users. These results, known as false positives, can be explained by statistical flaws in Google Play's testing tool. We believe that, in some cases, they can be detected with our new testing method.

“Statistical noise” in Google Play A/B tests

One of the main causes of false positives in Google Play results is how test samples are constructed. Google Play Store Experiments results offer no data breakdown, and Google's A/B testing tool may not distinguish between different types of users. This can create significant imbalances in the conversion rates of your test samples, because users visiting your app's store page have diverse intentions:

- Users searching for your brand are likely to download your app quickly due to their high awareness.

- Users from ad campaigns or generic searches may seek confirmation that the ad’s promise aligns with what they’ll get from your app, making them more sensitive to store listing elements.

Users are not filtered by their traffic source when conducting A/B tests with Google Play Store Experiments.

Because Google Play Store Experiments do not differentiate users before assigning them to the variants of your A/B test, your test samples can end up with quite different traffic compositions. This can produce significant differences in conversion that have nothing to do with the variants themselves.
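To see how traffic composition alone can move conversion, here is a minimal sketch that treats a sample's conversion rate as a weighted mix of two traffic types. The conversion rates for branded and generic visitors, and the brand shares of each sample, are hypothetical numbers chosen for illustration, not Google Play data.

```python
def blended_conversion(brand_share: float, cr_brand: float, cr_generic: float) -> float:
    """Conversion rate of a sample that mixes branded-search and generic/ad traffic.

    brand_share is the fraction of visitors who searched for the brand; the
    remaining visitors are assumed to come from generic searches or ads.
    """
    if not 0.0 <= brand_share <= 1.0:
        raise ValueError("brand_share must be between 0 and 1")
    return brand_share * cr_brand + (1.0 - brand_share) * cr_generic


# Hypothetical rates: branded visitors convert far better than generic ones.
CR_BRAND, CR_GENERIC = 0.60, 0.20

# Both samples see the SAME store listing; only their traffic mix differs.
sample_a = blended_conversion(0.40, CR_BRAND, CR_GENERIC)
sample_b = blended_conversion(0.45, CR_BRAND, CR_GENERIC)

relative_uplift = (sample_b - sample_a) / sample_a
print(f"A: {sample_a:.1%}  B: {sample_b:.1%}  apparent uplift: {relative_uplift:+.1%}")
```

With these made-up figures, a five-point difference in the share of branded visitors yields an apparent uplift of about +5.6%, even though both samples saw identical creatives.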

Another concern is seasonality, which causes day-to-day fluctuations in your test samples' performance. While seasonality is beyond your control, you can take it into account when conducting A/B tests:

- Run your tests for a minimum of 7 days to reduce the impact of weekly patterns. Google recommends this practice, yet its platform often reports A/B test results within a few days. Many developers have noticed that extending a test by a few more days can significantly change the outcome, sometimes even reversing the winner.

- Don’t A/B test creatives for a seasonal event ahead of time, as users are not yet preparing for that event. Either test at the beginning of your event, or simply apply your new creatives immediately and do a pre/post conversion analysis.
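A pre/post conversion analysis compares conversion over a window before the change with an equally long window after it. The sketch below assumes you have exported daily store listing visitors and installers for both windows (the input lists are hypothetical); requiring whole weeks keeps each weekday equally represented on both sides.

```python
from typing import Sequence


def conversion_rate(installers: Sequence[int], visitors: Sequence[int]) -> float:
    """Pooled conversion rate over a window: total installers / total visitors."""
    total_visitors = sum(visitors)
    if total_visitors == 0:
        raise ValueError("window has no visitors")
    return sum(installers) / total_visitors


def pre_post_uplift(pre_installers: Sequence[int], pre_visitors: Sequence[int],
                    post_installers: Sequence[int], post_visitors: Sequence[int]) -> float:
    """Relative change in conversion from the window before a change to the window after it."""
    lengths = {len(pre_installers), len(pre_visitors), len(post_installers), len(post_visitors)}
    # Equal windows made of whole weeks, so each weekday appears equally often on both sides.
    if len(lengths) != 1 or len(pre_visitors) == 0 or len(pre_visitors) % 7 != 0:
        raise ValueError("use daily series of equal length covering whole weeks")
    pre = conversion_rate(pre_installers, pre_visitors)
    post = conversion_rate(post_installers, post_visitors)
    if pre == 0:
        raise ValueError("no conversions before the change")
    return (post - pre) / pre


# Hypothetical daily data for one week before and one week after applying new creatives.
pre_visitors, pre_installers = [1000] * 7, [300] * 7
post_visitors, post_installers = [1000] * 7, [330] * 7
print(f"{pre_post_uplift(pre_installers, pre_visitors, post_installers, post_visitors):+.1%}")
```

Two consecutive weeks can still differ for reasons other than your creatives, so treat such an uplift as indicative rather than proof.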

Learn more about app seasonality trends with our case study on the holiday season

Differences between statistical significance & statistical power

Understanding Google Play Store Experiments and A/B testing is challenging because of the statistical model behind them. An A/B test needs to determine whether the difference between variant A's and variant B's results is significant. It should also indicate how likely the test is to detect a real difference (its statistical power, which guards against false negatives) and how likely it is to conclude there is a difference when there is none (its significance level, which sets the false positive risk).
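In standard textbook notation (not taken from Google Play's documentation), the two risks a test has to balance can be written as:

```latex
\begin{aligned}
\alpha &= P(\text{significant result} \mid \text{no real difference}) && \text{(false positive rate, significance level)}\\
\beta  &= P(\text{no significant result} \mid \text{real difference}) && \text{(false negative rate)}\\
\text{power} &= 1 - \beta
\end{aligned}
```

A 90% confidence interval corresponds to \(\alpha = 0.10\), a 95% interval to \(\alpha = 0.05\).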

Google Play Store Experiments report all results using a 90% confidence interval. This approach has two major drawbacks:

- The 90% confidence level is below the 95% standard used in most A/B tests, which doubles the risk of declaring a winner when there is no real difference.

- Play Store Experiments do not provide any clear data on how reliable each test is. This might lead you to believe that all tests are equally likely to deliver the predicted results when, in fact, some tests are more reliable than others.
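To illustrate the first drawback, here is a sketch that computes a simple (Wald) confidence interval for the difference in conversion between two samples, at 90% and at 95% confidence. This is a textbook approximation, not necessarily the method Google Play uses internally, and the installer and visitor counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist
from typing import Tuple


def conversion_diff_ci(installs_a: int, visitors_a: int, installs_b: int, visitors_b: int,
                       confidence: float = 0.90) -> Tuple[float, float]:
    """Wald confidence interval for the difference in conversion rate (B minus A)."""
    p_a = installs_a / visitors_a
    p_b = installs_b / visitors_b
    # Two-sided critical value: about 1.645 at 90% confidence, 1.960 at 95%.
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    se = sqrt(p_a * (1 - p_a) / visitors_a + p_b * (1 - p_b) / visitors_b)
    diff = p_b - p_a
    return diff - z * se, diff + z * se


# Hypothetical counts: 5,000 visitors per sample, 30.00% vs 31.66% conversion.
for level in (0.90, 0.95):
    low, high = conversion_diff_ci(1500, 5000, 1583, 5000, confidence=level)
    verdict = "B wins" if low > 0 else "inconclusive"
    print(f"{level:.0%} CI: [{low:+.2%}, {high:+.2%}] -> {verdict}")
```

With these counts the 90% interval lies entirely above zero, while the 95% interval includes zero: the same data produces a "winner" only at the lower confidence level.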

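The statistical power of an experiment depends largely on its sample size. Larger samples increase power, which lowers the risk of false negatives and makes it more likely that small improvements in conversion are detected. They also make it more likely that a detected winner reflects a real improvement rather than a false positive.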
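The link between sample size, the smallest uplift you want to detect, and power can be estimated before launching a test. The sketch below uses the standard normal-approximation formula for comparing two proportions; the 30% baseline conversion and the candidate uplifts are hypothetical, alpha=0.10 mirrors the 90% confidence level, and power=0.80 is a common convention rather than a Google Play setting.

```python
from math import ceil
from statistics import NormalDist


def visitors_per_variant(baseline_cr: float, relative_uplift: float,
                         alpha: float = 0.10, power: float = 0.80) -> int:
    """Approximate store listing visitors needed in EACH sample to detect a relative uplift.

    Normal approximation for a two-sided test comparing two conversion rates.
    """
    p1 = baseline_cr
    p2 = baseline_cr * (1 + relative_uplift)
    if not (0 < p1 < 1 and 0 < p2 < 1) or p1 == p2:
        raise ValueError("rates must lie strictly between 0 and 1 and differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.645 when alpha = 0.10
    z_power = NormalDist().inv_cdf(power)          # about 0.842 when power = 0.80
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_power) ** 2 * variance / (p2 - p1) ** 2)


for uplift in (0.10, 0.05, 0.02):
    n = visitors_per_variant(0.30, uplift)
    print(f"{uplift:.0%} uplift on a 30% baseline: ~{n:,} visitors per sample")
```

Halving the uplift you want to detect roughly quadruples the required sample. In an A/B/B test each sample receives only a third of the traffic, so the whole experiment needs about three times the per-sample figure.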

Running A/B/B tests to flag false positive results

Having identified the likely reasons why Google Play Experiments gave us false positive results more often than not, our team looked for ways to limit the risk of encountering them, and also to estimate, from the test results themselves, how likely a given result was to be a false positive.

Our conclusions were:

- Keep all tests running for at least 7 days to limit misleading results caused by weekly seasonality, and make sure sample sizes are large enough to give each test sufficient statistical power.

- ASO practitioners should not shy away from running tests while their marketing team runs UA campaigns, but they need to ensure that the traffic split between marketing channels remains stable throughout the test.

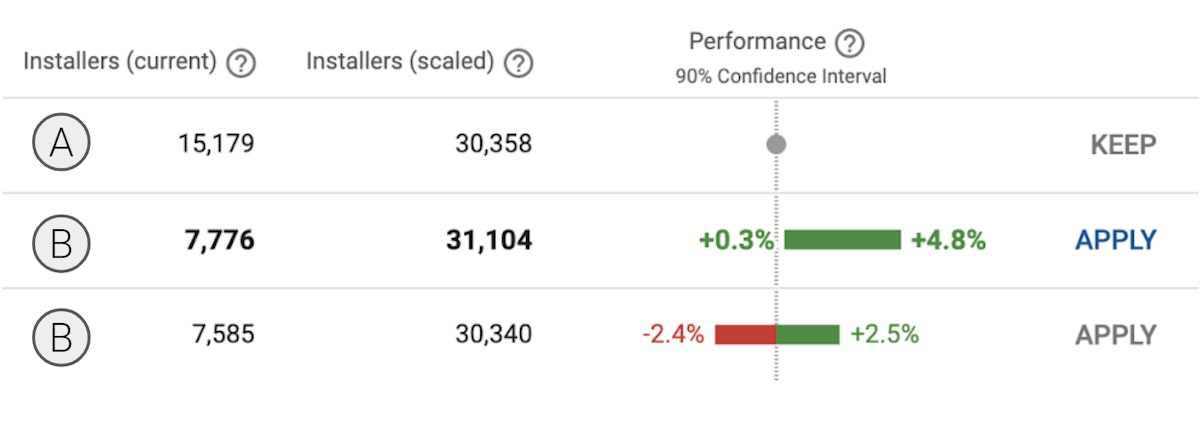

- Adding two identical B variants to the test (effectively designing an A/B/B test rather than an A/B test) helps assess whether results are likely to be true or false positives. If both B samples give similar results, these are likely true positives. If they give different results, at least one of the two is likely a false positive.

- As a consequence of this structure, the three samples should receive 33.34%, 33.33% and 33.33% of traffic respectively, and no other (C) variant should be added to the test.
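One way to check that the channel mix stayed stable is to compare each day's traffic share per channel with its typical share over the test. The sketch below is a hypothetical helper: it assumes you can export daily store listing visitors by channel (for example from your acquisition reports), and the channel names, the 5-point tolerance and the use of the median daily share as a reference are illustrative choices.

```python
from statistics import median
from typing import Dict, List


def unstable_days(daily_visitors: List[Dict[str, int]], tolerance: float = 0.05) -> List[int]:
    """Flag days whose traffic split between channels drifts from the typical split.

    daily_visitors: one dict per day, mapping a channel name to store listing visitors.
    tolerance: maximum absolute drift in a channel's share (0.05 = 5 percentage points).
    The reference share for each channel is its median daily share over the test,
    so a single burst of paid traffic does not shift the reference itself.
    """
    channels = sorted({ch for day in daily_visitors for ch in day})
    shares = []
    for day in daily_visitors:
        total = sum(day.values())
        shares.append({ch: day.get(ch, 0) / total for ch in channels} if total else None)

    valid = [s for s in shares if s is not None]
    reference = {ch: median(s[ch] for s in valid) for ch in channels}

    return [
        i for i, s in enumerate(shares)
        if s is not None and any(abs(s[ch] - reference[ch]) > tolerance for ch in channels)
    ]


# Hypothetical week: a UA campaign triples paid traffic on the last day.
days = [{"brand": 500, "ua_ads": 500}] * 6 + [{"brand": 500, "ua_ads": 1500}]
print(unstable_days(days))
```

Here only the last day is flagged; a test whose days are frequently flagged is a candidate for the composition bias described earlier.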

When the first and second B samples give very different results, the A/B/B test flags them as suspicious: at least one of the two results is likely a false positive.
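The method does not define how similar the two B results must be. The sketch below is one possible way to put it into practice, assuming you have (or can estimate) installers and visitors for each sample: it runs a standard pooled two-proportion z-test (not necessarily what Google Play computes) with alpha=0.10 to mirror the 90% confidence level. The function names are hypothetical.

```python
from math import sqrt
from statistics import NormalDist
from typing import Dict, Tuple

Sample = Tuple[int, int]  # (installers, visitors)


def two_proportion_p_value(x1: int, n1: int, x2: int, n2: int) -> float:
    """Two-sided p-value of a pooled two-proportion z-test."""
    pooled = (x1 + x2) / (n1 + n2)
    se = sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    if se == 0:
        return 1.0
    z = (x2 / n2 - x1 / n1) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def check_abb(a: Sample, b1: Sample, b2: Sample, alpha: float = 0.10) -> Dict[str, object]:
    """Summarize an A/B/B test and flag it when the two identical B samples disagree."""
    cr_a = a[0] / a[1]
    result: Dict[str, object] = {}
    for name, (x, n) in (("b1", b1), ("b2", b2)):
        result[f"{name}_uplift"] = (x / n - cr_a) / cr_a
        result[f"{name}_wins"] = x / n > cr_a and two_proportion_p_value(*a, x, n) < alpha
    # Identical variants should not differ significantly from each other...
    b_samples_differ = two_proportion_p_value(*b1, *b2) < alpha
    # ...and should agree on whether B beats A.
    result["suspicious"] = b_samples_differ or result["b1_wins"] != result["b2_wins"]
    return result


# Hypothetical (installers, visitors) per sample, 5,000 visitors each.
print(check_abb((1500, 5000), (1600, 5000), (1590, 5000))["suspicious"])  # consistent B samples
print(check_abb((1500, 5000), (1600, 5000), (1510, 5000))["suspicious"])  # B samples disagree
```

In the first call both B samples beat A and agree with each other, so the result is not flagged; in the second, only one B sample beats A, so the test is flagged as suspicious.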

The key advantage of this new protocol is that it assesses test reliability within a week. In contrast, a popular method involves running an A/B test in the first week and then a B/A test in the second week to confirm the initial results. Both approaches help prevent false positives, but the A/B/B structure shortens the time needed before acting and tests the "confirmation" sample at the same time as the others, which avoids seasonality biases between the two weeks.

Assessing the impact of the A/B/B test method

Having educated ourselves about statistics, our next goal is to assess the real impact A/B/B tests can have for ASO practitioners. We are therefore calling on all willing readers to try this method with their apps and share their results with AppTweak, so that we can report in a later article on the true impact of A/B/B tests, especially how often they detected false positive results and how often they failed to detect them.

The information we would like to get from you for each test includes:

- App name (optional)

- App category

- Store listing of the test (Global / en-us default / en-uk / es-spain / …)

- Start & end date

- Variant type tested

- Applied traffic split

- Installers per variant

- Lower limit of the confidence interval

- Upper limit of the confidence interval

- For applied variants, conversion uplift between the week after your test and the week before
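If you collect these fields programmatically, a simple record type keeps each test consistent. The dataclass below is a hypothetical structure mirroring the list above; its field names are illustrative and are not the official template. It treats the end date as the last day of the test (inclusive) and assumes confidence interval limits are reported per B sample.

```python
from dataclasses import dataclass
from datetime import date
from typing import Dict, Optional


@dataclass
class AbbTestReport:
    """One shared test result; field names are illustrative, not the official template."""
    app_category: str
    store_listing: str                  # e.g. "Global" or a localized listing
    start: date
    end: date                           # treated here as the last day of the test (inclusive)
    variant_type: str                   # e.g. "screenshots"
    traffic_split: Dict[str, float]     # sample -> % of traffic
    installers: Dict[str, int]          # sample -> installers
    ci_lower: Dict[str, float]          # B sample -> lower limit of its confidence interval (%)
    ci_upper: Dict[str, float]          # B sample -> upper limit of its confidence interval (%)
    post_vs_pre_uplift: Optional[float] = None  # only for variants that were applied
    app_name: Optional[str] = None      # optional

    def duration_days(self) -> int:
        return (self.end - self.start).days + 1

    def split_is_complete(self) -> bool:
        return abs(sum(self.traffic_split.values()) - 100.0) < 1e-6

    def interval_excludes_zero(self, sample: str) -> bool:
        """True when the reported interval lies entirely above or entirely below zero."""
        return self.ci_lower[sample] > 0 or self.ci_upper[sample] < 0


# Hypothetical example values, for illustration only.
report = AbbTestReport(
    app_category="Games", store_listing="Global",
    start=date(2020, 5, 4), end=date(2020, 5, 10), variant_type="screenshots",
    traffic_split={"A": 33.34, "B1": 33.33, "B2": 33.33},
    installers={"A": 1500, "B1": 1600, "B2": 1510},
    ci_lower={"B1": 0.8, "B2": -3.1}, ci_upper={"B1": 12.4, "B2": 5.2},
)
print(report.duration_days(), report.interval_excludes_zero("B1"), report.interval_excludes_zero("B2"))
```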

You can find a template for sharing your test results here. This data will be kept confidential by AppTweak and only used for aggregating statistics regarding the A/B/B test method.

If you are willing to share test results or have questions about the A/B/B method, please contact our team via our website, email, social media, or the ASO Stack Slack channel.

Oriane Ineza


Alexandra De Clerck
