A Guide to Google Play Store Listing Experiments

Boosting your app’s visibility through metadata optimization, an increased category ranking, or being featured is great, but this is only one component of ASO. If you are not converting those app impressions into downloads, you are limiting your app’s potential. That’s where Google Play’s store listing experiments can be a great resource for ASO practitioners.

Google Play store listing experiments allow you to run A/B tests to determine – with data – the creative or metadata elements that should be incorporated on your app store listing page to help increase conversions.

While many of us may be familiar with the tool, it is still quite new to some. Google has also added new features that give ASO practitioners more control over how they customize their A/B tests. This blog covers best practices as well as these new Google Play updates.

Benefits of Google Play Store listing experiments

Before getting into the specifics, let’s look at the benefits of incorporating store listing experiments into your ASO strategy on Google Play.

Running A/B tests on Google Play gives you insights into which creative and metadata elements resonate with users. A/B testing on Google Play allows you to:

- Identify the best metadata and creative elements that should be incorporated on your store listing page.

- Increase your app’s installs, conversion rate, and retention rate thanks to the insights gained through A/B testing.

- Learn what resonates (or not) with your target market, based on their language and locality.

- Identify seasonality trends and takeaways that can be applied to your store listing page.

What can you test with Google Play Store listing experiments?

Next, let’s review the creative and metadata elements that can be tested through Google Play experiments. The following app store elements can be A/B tested, in up to five languages.

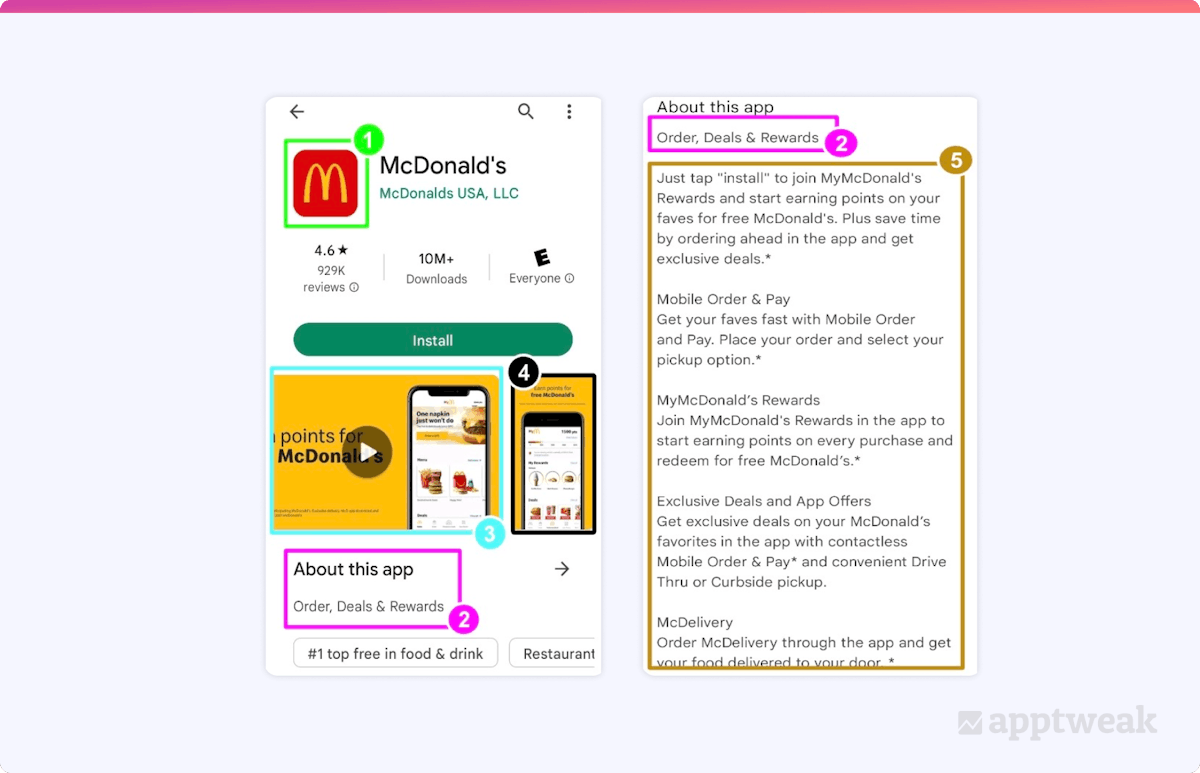

- Icon: Your app’s icon is the first creative element visible to users. First impressions on Google Play are crucial, as the icon may be the sole visible element in search results and can significantly affect conversion rates.

- Short description: The short description, up to 80 characters, follows your app’s title and is crucial for keyword indexing. It appears beneath the video and screenshots on your store listing.

- Feature graphic & preview video: Your app’s preview video is a short video clip that highlights your app’s main value propositions. The feature graphic overlays the preview video and appears before your app screenshots, taking up most of the device view. It is therefore a major asset for conversion.

- Screenshots: Your screenshots showcase your app’s key benefits and preview the user experience.

- Long description: Your long description has a 4,000-character limit and is important for keyword indexing. Few users read it in full, yet it can still significantly impact conversions.

Screenshot of the store listing page for McDonald’s (Google Play, US).
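If you prepare several text variants for a test, a quick length check avoids surprises at upload time. The sketch below is a hypothetical helper, not part of any Google tool. It uses the 80- and 4,000-character limits mentioned above and counts characters with Python's `len()`, which may not match exactly how the Play Console counts every character (emoji, for example).

```python
# Limits as stated in this article; confirm current values in the Play Console.
SHORT_DESCRIPTION_LIMIT = 80
LONG_DESCRIPTION_LIMIT = 4000


def check_description_lengths(short_description: str, long_description: str) -> list[str]:
    """Return a list of problems; an empty list means both texts fit."""
    problems = []
    # len() counts Unicode code points, which may differ from Google's count.
    if len(short_description) > SHORT_DESCRIPTION_LIMIT:
        problems.append(
            f"short description: {len(short_description)} chars "
            f"(limit {SHORT_DESCRIPTION_LIMIT})"
        )
    if len(long_description) > LONG_DESCRIPTION_LIMIT:
        problems.append(
            f"long description: {len(long_description)} chars "
            f"(limit {LONG_DESCRIPTION_LIMIT})"
        )
    return problems


# Hypothetical variant text for a short-description test.
variant_b = "Order ahead, skip the line, and collect rewards on every visit."
print(check_description_lengths(variant_b, "Full description text goes here."))  # prints []
```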

Best practices for Store Listing experiments

Now that you understand Google Play store listing experiments, it’s time for the fun part: running one! Before you do, make sure to consider these best practices for your A/B testing strategy on Google Play.

1. Target the right people

When setting up a test, you’ll have the opportunity to select up to 5 different languages. A common mistake is assuming “EN-US” limits your test to US users.

If your app is only available in the United States, this will be the case. Otherwise, your test could also become visible to users in other English-speaking countries.

To conduct a test targeting a specific country, you need to create a custom store listing and carry out an A/B test on that listing. Make sure to select the language or territory that makes the most sense for your A/B test.

We also recommend targeting the largest audience possible. A larger audience gives you a bigger sample, which makes your test results more accurate and helps you get them faster.

2. Have a clear hypothesis

A clear hypothesis will let you easily determine whether your A/B test was successful and if your winning variant should be implemented. For example, a clear hypothesis could state that highlighting value proposition A more than value proposition B in your app’s first screenshot will lead to an increase in your overall conversion rate.

3. Test one element at a time

This goes hand in hand with having a clear hypothesis: you should test only one creative or metadata element at a time. The more elements you test at once, the less sure you can be when attributing the test result to a specific change.

4. Ensure your test results are accurate

Check the accuracy of your test results to make sure the changes you implement actually have a positive impact on your conversion rate. Google has added new tools that help lower the risk of false positives in your A/B tests (more on this later). More accurate A/B tests require bigger sample sizes, which is not always possible for newer or smaller apps. One workaround is to run an A/B/B test: creating two identical B variants lets you check whether their results match. Because the two B variants are the same, any significant difference between them is down to chance, which signals that the A vs. B result may not be reliable either.
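To illustrate the A/B/B idea, the sketch below compares the two identical B variants with a standard pooled two-proportion z-test. This is a generic statistical check chosen for illustration, not the method Google Play uses to evaluate experiments; the function names, the hypothetical counts, and the 5% significance threshold are all assumptions.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(conv_1: int, n_1: int, conv_2: int, n_2: int) -> float:
    """Two-sided p-value of a pooled two-proportion z-test."""
    pooled = (conv_1 + conv_2) / (n_1 + n_2)
    se = sqrt(pooled * (1 - pooled) * (1 / n_1 + 1 / n_2))
    if se == 0:  # both arms converted at 0% or 100%: no detectable difference
        return 1.0
    z = (conv_1 / n_1 - conv_2 / n_2) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def b_variants_agree(conv_b1: int, visitors_b1: int,
                     conv_b2: int, visitors_b2: int,
                     alpha: float = 0.05) -> bool:
    """True if the two identical B variants are not significantly different."""
    return two_proportion_p_value(conv_b1, visitors_b1, conv_b2, visitors_b2) >= alpha


# Hypothetical counts: conversions and store listing visitors per B variant.
print(b_variants_agree(412, 1000, 398, 1000))  # True
```

If the two B variants do come out significantly different from each other, that gap cannot be caused by the variant itself, so treat any A vs. B result from the same test with extra caution.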

5. Iterate your tests

Apply the learnings from previous tests to new ones to keep improving your testing strategy. There is no such thing as bad data: even if the variants you test are not successful, you can still analyze the data and take away learnings for the future.

6. Run your test for at least one week

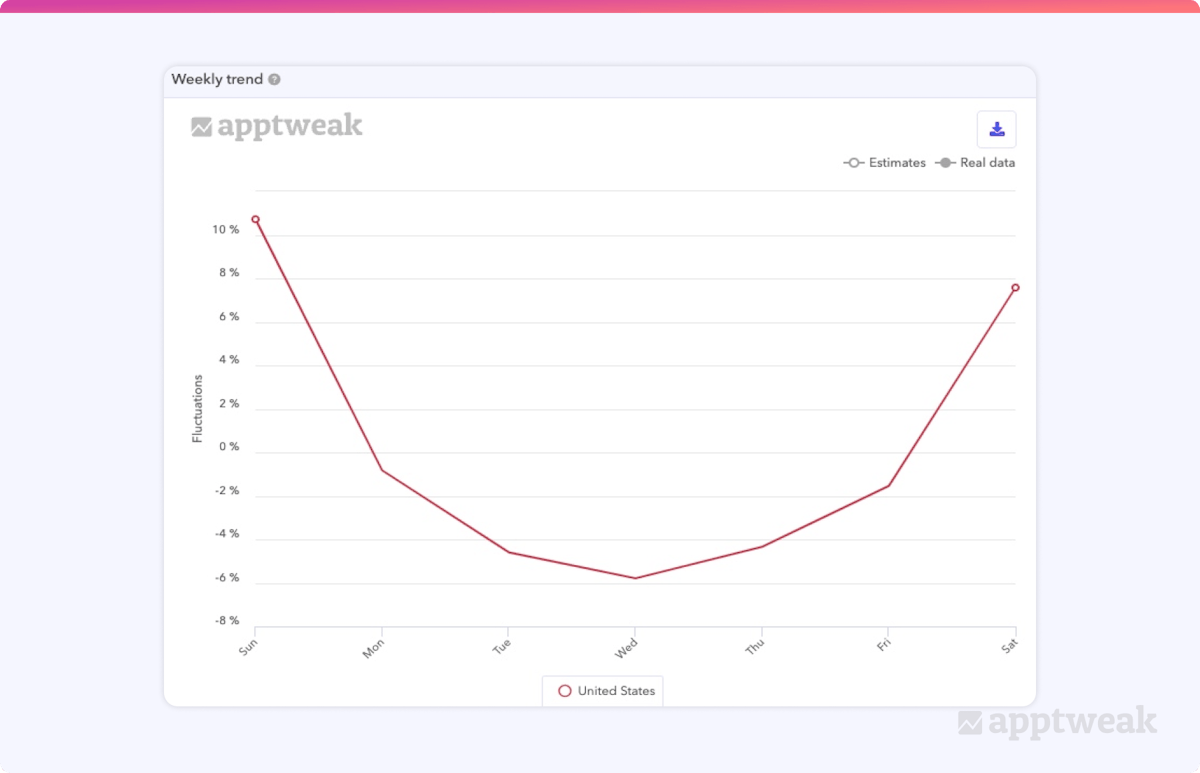

This will ensure you take into account your app’s weekly seasonality effects. The following image shows the weekly seasonality trend for games in the US, which generally receive more downloads at the weekend, when more users have free time. Ignoring weekly seasonality trends can make your sample less representative and skew the results of your A/B test.

Average weekly download fluctuations for Games (free) in the United States, Google Play.
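A simple way to apply this rule when planning is to round any estimated test duration up to whole weeks, so each day of the week is equally represented in your sample. The helper below is a hypothetical planning aid, not a Play Console setting.

```python
import math


def whole_week_duration(estimated_days: float, min_weeks: int = 1) -> int:
    """Round a planned test length up to whole weeks, with a floor of min_weeks."""
    if estimated_days < 0:
        raise ValueError("estimated_days must be non-negative")
    weeks = max(min_weeks, math.ceil(estimated_days / 7))
    return weeks * 7


print(whole_week_duration(9))  # 14: two full weeks instead of 9 days
```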

New features in Google Play Store listing experiments (2022)

New features implemented by Google for store listing experiments give developers and ASO practitioners more control over their A/B tests and statistically more reliable results.

To make accurate decisions based on the results of your A/B tests, you need to decrease the probability of errors and false positives while increasing the likelihood that your results are true positives. As ASO practitioners, you want to be sure that the difference between the control and the winning variant occurred because of the change you implemented, and not by chance. Because the A/B testing methodology relies on both statistical analysis and probability calculations, it is important that both are as accurate as possible.

Let’s dive right into the new features of Google Play store listing experiments:

Targeting the right metric

The first step to ensuring that the results of your A/B test are true is selecting the right metric. Previously, Google Play only allowed you to select how much traffic would be sent to the control and each variant; you could not specify what would count as a conversion from that traffic.

With the new target metric feature, developers now have more control over what metric will be used to determine the experiment result. The two metrics are as follows:

- Retained first-time installers: The number of users who installed the app for the first time and kept it installed for at least 1 day.

- First-time installers: The number of users who installed the app for the first time, regardless of whether they kept it or not.

Google recommends selecting “retained first-time installers,” which makes sense because a high uninstall rate can also directly impact your app’s visibility on Google Play. If users are downloading your app but uninstalling it within a day, it could be because your store listing page doesn’t accurately represent your app or how it can fulfill user expectations. One thing to keep in mind is that selecting “retained first-time installers” will require a bigger sample size to get accurate results.
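The difference between the two target metrics can be sketched in code. The record format, field names, and `as_of` cut-off below are hypothetical (Google Play does not expose experiment data in this shape, and its exact counting rules are not documented here). The point is only to show that “retained first-time installers” is a subset of “first-time installers,” which helps explain why it needs a bigger sample. Installs younger than one day cannot count as retained yet, hence the cut-off.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

ONE_DAY = timedelta(days=1)


@dataclass
class InstallRecord:
    """Hypothetical install record; not a Play Console export format."""
    user_id: str
    first_time: bool                  # first ever install of the app by this user
    installed_at: datetime
    uninstalled_at: Optional[datetime] = None


def kept_for_a_day(record: InstallRecord, as_of: datetime) -> bool:
    """True if the app stayed installed for at least one day."""
    # Still installed: measure up to the as_of cut-off instead.
    end = record.uninstalled_at if record.uninstalled_at is not None else as_of
    return end - record.installed_at >= ONE_DAY


def target_metrics(records: list[InstallRecord], as_of: datetime) -> dict[str, int]:
    first_timers = [r for r in records if r.first_time]
    retained = [r for r in first_timers if kept_for_a_day(r, as_of)]
    return {
        "first_time_installers": len(first_timers),
        "retained_first_time_installers": len(retained),
    }


start = datetime(2024, 1, 1)
records = [
    InstallRecord("a", True, start),                               # kept it
    InstallRecord("b", True, start, start + timedelta(hours=2)),   # uninstalled same day
    InstallRecord("c", False, start),                              # reinstall, not first-time
]
print(target_metrics(records, as_of=start + timedelta(days=3)))
# {'first_time_installers': 2, 'retained_first_time_installers': 1}
```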

Expert Tip

If your app has a high level of brand recognition and receives a lot of downloads, we recommend selecting “retained first-time installers.” If you have a newer app that doesn’t receive many downloads, we recommend selecting “first-time installers” so the required sample size for accurate results is smaller and your test does not have to run as long.

Confidence level

As mentioned earlier, decreasing the chances of false positives is the main goal behind the new features Google Play has added to store listing experiments, and the new confidence level setting helps directly with that. First, it is important to highlight the difference between confidence level and confidence interval:

- Confidence level: The percentage of times you would expect to get similar results if you were to rerun the test many times.

- Confidence interval: The range of values that is likely to contain the true result, at the chosen confidence level. If you run an A/B test with a 90% confidence level and your results range from a 1.1% decrease to a 0.3% increase in conversion rate, you can be 90% confident that the true change in conversion rate falls within that range.
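To make the confidence interval more concrete, here is a minimal sketch that computes one for the difference in conversion rate between a variant and the control. It uses the textbook normal-approximation (Wald) interval, which is an assumption for illustration; Google does not document its exact calculation here. The counts are hypothetical, and the result is in absolute percentage points, whereas reported figures may be relative changes.

```python
from math import sqrt
from statistics import NormalDist


def diff_confidence_interval(conv_control: int, n_control: int,
                             conv_variant: int, n_variant: int,
                             level: float = 0.90) -> tuple[float, float]:
    """Wald interval for (variant rate - control rate), in absolute terms."""
    p_c = conv_control / n_control
    p_v = conv_variant / n_variant
    se = sqrt(p_c * (1 - p_c) / n_control + p_v * (1 - p_v) / n_variant)
    z = NormalDist().inv_cdf(1 - (1 - level) / 2)  # about 1.645 for 90%
    diff = p_v - p_c
    return diff - z * se, diff + z * se


# Hypothetical counts: conversions / store listing visitors.
low, high = diff_confidence_interval(4500, 10000, 4460, 10000, level=0.90)
print(f"90% CI for the change: {low:+.2%} to {high:+.2%}")
```

A higher `level` produces a wider interval from the same data, so narrowing it back down requires more installers.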

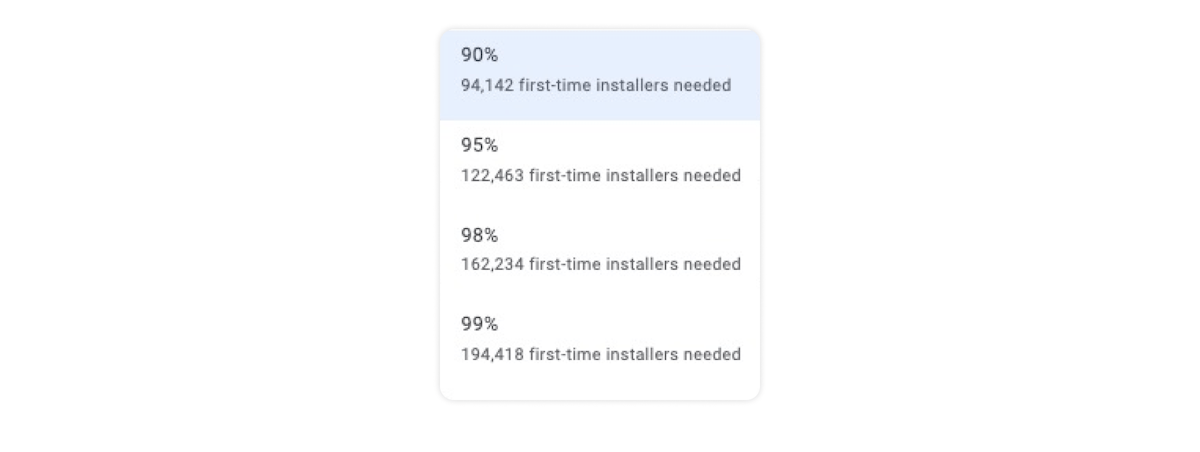

This new tool allows you to adjust the confidence level to decrease the chances of any false positives. You can select a confidence level of 90%, 95%, 98%, or 99%. The higher the confidence level, the more first-time installers are needed.

Google Play confidence level. Source: Google Play Developer Console.

The number of first-time installers needed will also depend on the target metric, variants, experiment audience, and the minimum detectable effect you selected. Generally speaking, you want to go with at least a 95% confidence level. If you have a smaller app that doesn’t receive a lot of downloads, selecting the target metric “first-time installers” and only testing one variant will lower the number of first-time installers you need.
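Why does a higher confidence level need more first-time installers? Under the standard fixed-sample approximation for comparing two conversion rates, the required sample size grows with the square of (z for the confidence level + z for statistical power). The sketch below compares the four levels against 90%, assuming 80% power; Google's own calculation is not published in this form, so treat the multipliers as directional only.

```python
from statistics import NormalDist

CONFIDENCE_LEVELS = (0.90, 0.95, 0.98, 0.99)  # options listed in this article
ASSUMED_POWER = 0.80  # assumption: a common default, not a documented Play setting


def z_critical(level: float) -> float:
    """Two-sided critical value of the standard normal for a confidence level."""
    return NormalDist().inv_cdf(1 - (1 - level) / 2)


def sample_size_multiplier(level: float, baseline_level: float = 0.90,
                           power: float = ASSUMED_POWER) -> float:
    """Sample needed at `level` relative to `baseline_level`, all else equal."""
    z_power = NormalDist().inv_cdf(power)
    return ((z_critical(level) + z_power) / (z_critical(baseline_level) + z_power)) ** 2


for level in CONFIDENCE_LEVELS:
    print(f"{level:.0%}: z = {z_critical(level):.3f}, "
          f"about {sample_size_multiplier(level):.2f}x the sample needed at 90%")
```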

Minimum detectable effect in store listing experiments

The minimum detectable effect (MDE) is another new feature that helps lower the number of false positives in A/B tests. Google defines an MDE as “The minimum difference between variants and control required to declare which performs better. If the difference is less than this, your experiment will be considered a draw.”

For example, if you set your MDE to 5% and your control drives a conversion rate of 45%, your variant would need a conversion rate of at least 47.25% to be declared the winner (45% × 1.05 = 47.25%).
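The arithmetic in this example treats the MDE as a relative uplift on the control's conversion rate. A minimal sketch of that calculation, assuming this relative interpretation and ignoring the confidence level, which also determines whether a winner is declared:

```python
def min_winning_rate(control_rate: float, mde: float) -> float:
    """Lowest variant conversion rate that clears a relative MDE.

    Assumes the MDE is relative to the control, as in the 45% / 5% example.
    """
    if not 0 <= control_rate <= 1 or mde < 0:
        raise ValueError("control_rate must be in [0, 1] and mde must be >= 0")
    return control_rate * (1 + mde)


def clears_mde(control_rate: float, variant_rate: float, mde: float) -> bool:
    """True if the variant beats the control by at least the MDE."""
    return variant_rate >= min_winning_rate(control_rate, mde)


print(f"{min_winning_rate(0.45, 0.05):.2%}")  # 47.25%
```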

You can currently select an MDE between 0.5% and 6%. The smaller the MDE, the bigger the sample size you need to detect the change. Imagine that you are quality-checking pens on a conveyor belt. If you want to prove that 50% of the pens are below standard, what is the smallest number of pens you would need to examine before you can draw a conclusion? Probably fewer than if you wanted to prove that only 2% of the pens are of low quality.

If your app is new and does not receive many installs, we recommend you set your MDE higher, as the sample size required would be smaller. If you have a more mature app and already receive a lot of downloads, we recommend setting a lower MDE for more precise results.
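To see how quickly the required sample grows as the MDE shrinks, here is a sketch using the standard fixed-horizon sample-size formula for a two-sided test of two proportions. The 30% baseline conversion rate, 95% confidence, and 80% power are illustrative assumptions. The output is store listing visitors per arm, not the first-time installer figure the Play Console reports, whose underlying method may differ.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_arm(baseline: float, relative_mde: float,
                        confidence: float = 0.95, power: float = 0.80) -> int:
    """Visitors per arm for a two-sided, fixed-horizon test of two proportions."""
    p1 = baseline
    p2 = baseline * (1 + relative_mde)  # MDE treated as a relative uplift
    if relative_mde <= 0 or not 0 < p1 < 1 or not 0 < p2 < 1:
        raise ValueError("need 0 < baseline < 1, relative_mde > 0, and p2 < 1")
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    z_beta = NormalDist().inv_cdf(power)
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


# Assumed 30% baseline conversion rate; MDEs within the 0.5% to 6% range above.
for mde in (0.06, 0.03, 0.01, 0.005):
    print(f"MDE {mde:.1%}: about {sample_size_per_arm(0.30, mde):,} visitors per arm")
```

Halving the MDE roughly quadruples the sample per arm, because the sample size scales with 1 / (difference)².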

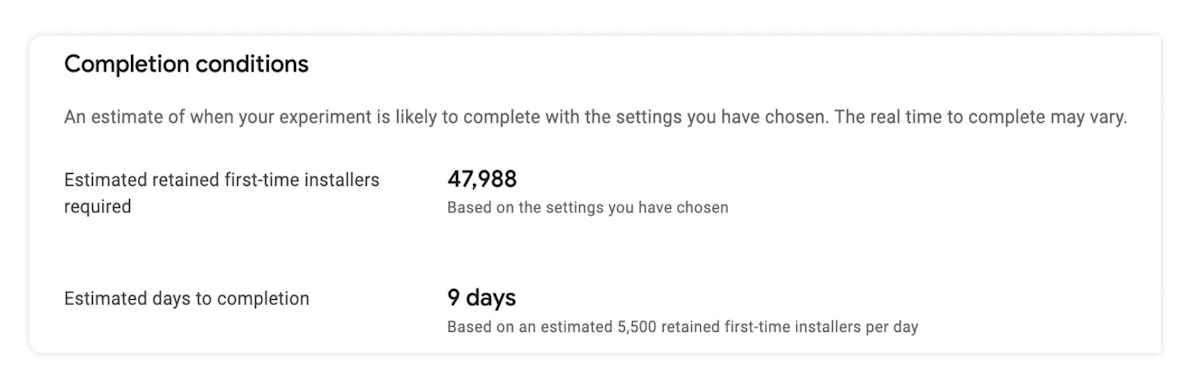

Completion conditions

The last new feature of Google Play store listing experiments is completion conditions. Based on the values you set for the other settings, this feature estimates when your experiment is likely to be completed. With this insight, you can tailor your A/B test to meet your needs, especially if you have a specific timeline for it.

Google Play completion conditions highlight the estimated completion conditions for an A/B test. Source: Google Play Developer Console.
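As a rough illustration of what such an estimate involves, an experiment can only finish once its slowest-filling arm has collected enough data. The sketch below assumes a known required sample per arm and steady daily installer counts for each arm; both inputs and the function name are hypothetical, and Google's own estimate may be computed differently. It also enforces the one-week minimum recommended earlier in this article.

```python
import math


def estimate_days_to_complete(required_per_arm: int,
                              daily_installers_per_arm: list[float],
                              min_days: int = 7) -> int:
    """Days until every arm reaches required_per_arm (hypothetical inputs)."""
    if not daily_installers_per_arm or min(daily_installers_per_arm) <= 0:
        raise ValueError("every arm needs a positive daily installer count")
    slowest = min(daily_installers_per_arm)  # the smallest arm sets the pace
    return max(min_days, math.ceil(required_per_arm / slowest))


# Hypothetical: control and one variant, uneven vs. even traffic.
print(estimate_days_to_complete(5000, [900, 100]))  # 50
print(estimate_days_to_complete(5000, [500, 500]))  # 10
```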

How do you set up a Google Play experiment?

Now that we have highlighted the new features Google Play has incorporated into store listing experiments, let’s find out how you can leverage these new features when setting up your A/B test.

Step 1

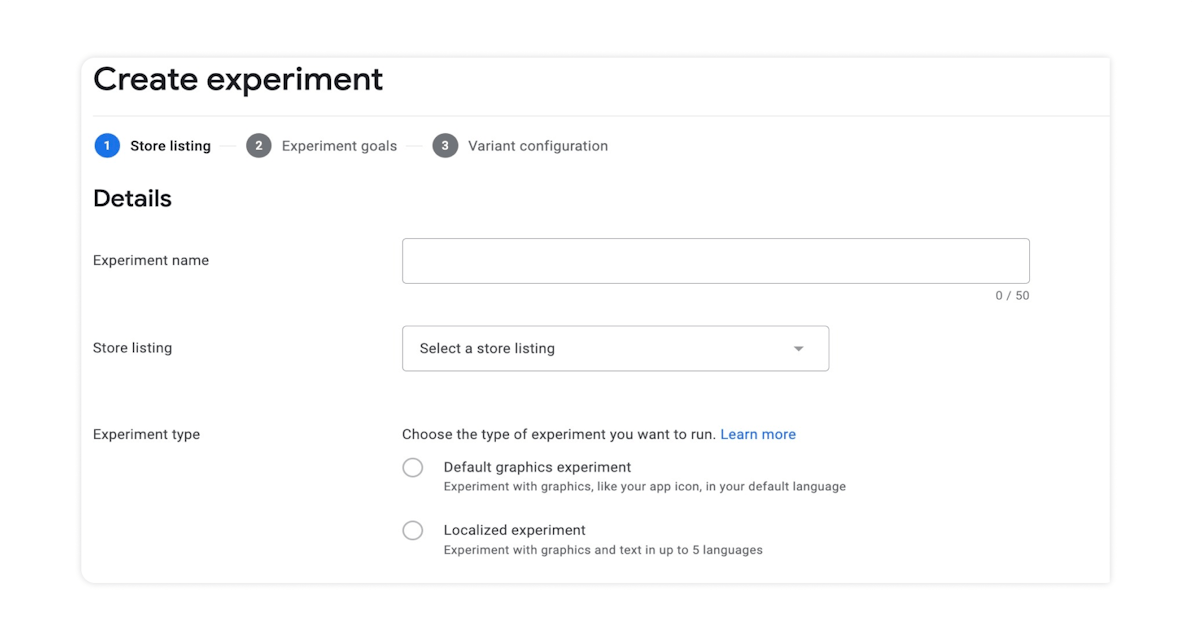

Select “Store listing experiments” under the store presence section. This page lists all the experiments you have run previously. Click on “create experiment” in the top right-hand corner of the page, and you will be taken to the page shown below.

Source: Google Play Developer Console

This is where you can name your experiment, choose which store listing you want to run the A/B test for, and select whether you want a localized or global test.

- Localized test: Test in up to 5 languages, with both creative and metadata elements.

- Global test: Test users worldwide in a single language, with creative elements only.

We recommend leveraging localized tests. What resonates best with users will differ greatly between languages, and with a global test it is hard to draw learnings that can be applied to specific territories. Picking the languages of your biggest territories will provide better insights into user behavior in your most important markets.

Step 2

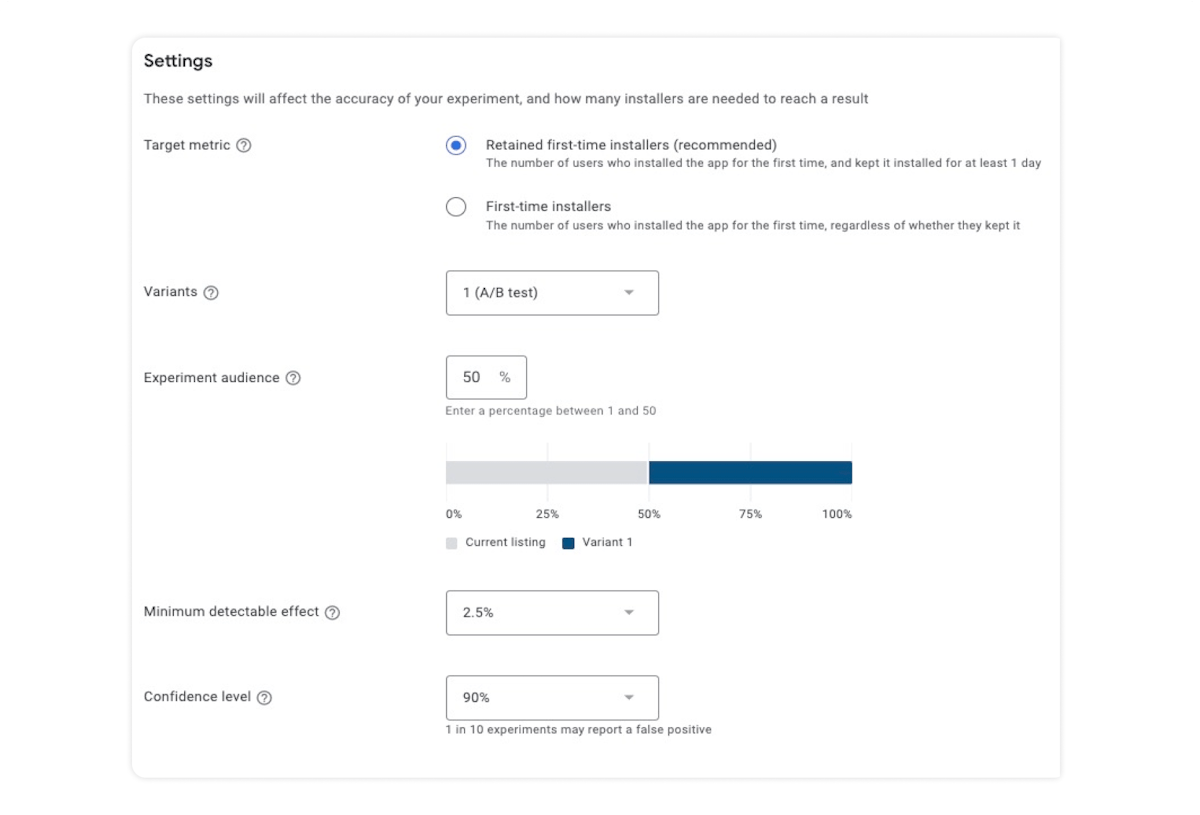

Once the first part is complete, you will be taken to the following page.

Source: Google Play Developer Console

- “Target metric” is the first setting to fill out. Here is where you can select “retained first-time installers” or “first-time installers.”

- Select how many (up to 3) variants you want to test against your control. The more variants you have, the longer the test will run, as each variant needs enough traffic to reach a reliable result.

- Next, you can select the percentage of store listing visitors that will see the experimental variant(s) instead of your current listing. The visitors will be split equally across your test variants. We recommend splitting visitors evenly between the current store listing and your variants, as this will speed up the results of the test.

- Select the minimum detectable effect for your test. Remember that the lower your MDE, the longer it will take for the test to get enough of a sample size to declare a true result. If your app doesn’t receive many downloads, we recommend setting your MDE higher than an app already receiving a lot of downloads.

- Finally, select the desired confidence level for your A/B test; a 95% confidence level is generally preferred. If the number of first-time installers required for a 95% confidence level is too high for your app, you can change your target metric to “first-time installers” and test only one variant to decrease the number of first-time installers you need.
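Based on the description above, you can work out what share of visitors each arm receives. In this sketch (function and arm names are hypothetical), `audience` is the fraction of visitors shown a variant, split equally across variants, and everyone else sees the current listing as the control:

```python
def arm_shares(audience: float, n_variants: int) -> dict[str, float]:
    """Share of store listing visitors per arm (hypothetical helper).

    `audience` is the fraction of visitors shown a variant, split equally
    across variants; the remaining visitors see the current listing (control).
    """
    if not 0 < audience < 1:
        raise ValueError("audience must be strictly between 0 and 1")
    if not 1 <= n_variants <= 3:
        raise ValueError("this article describes 1 to 3 variants")
    shares = {"control": 1 - audience}
    for i in range(1, n_variants + 1):
        shares[f"variant_{i}"] = audience / n_variants
    return shares


print(arm_shares(0.50, 1))  # {'control': 0.5, 'variant_1': 0.5}
print(arm_shares(0.75, 3))  # every arm gets 0.25
```

Because the test is limited by its smallest arm, an audience of n / (n + 1) for n variants (50% for one variant, 75% for three) gives every arm the same share, which is why an even split tends to finish faster.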

Note: Once all the steps are complete, the completion conditions section will estimate when your experiment is likely to finish. This information will help you further edit the settings of your A/B test if the required first-time installers are too high or the estimated test duration is too long.

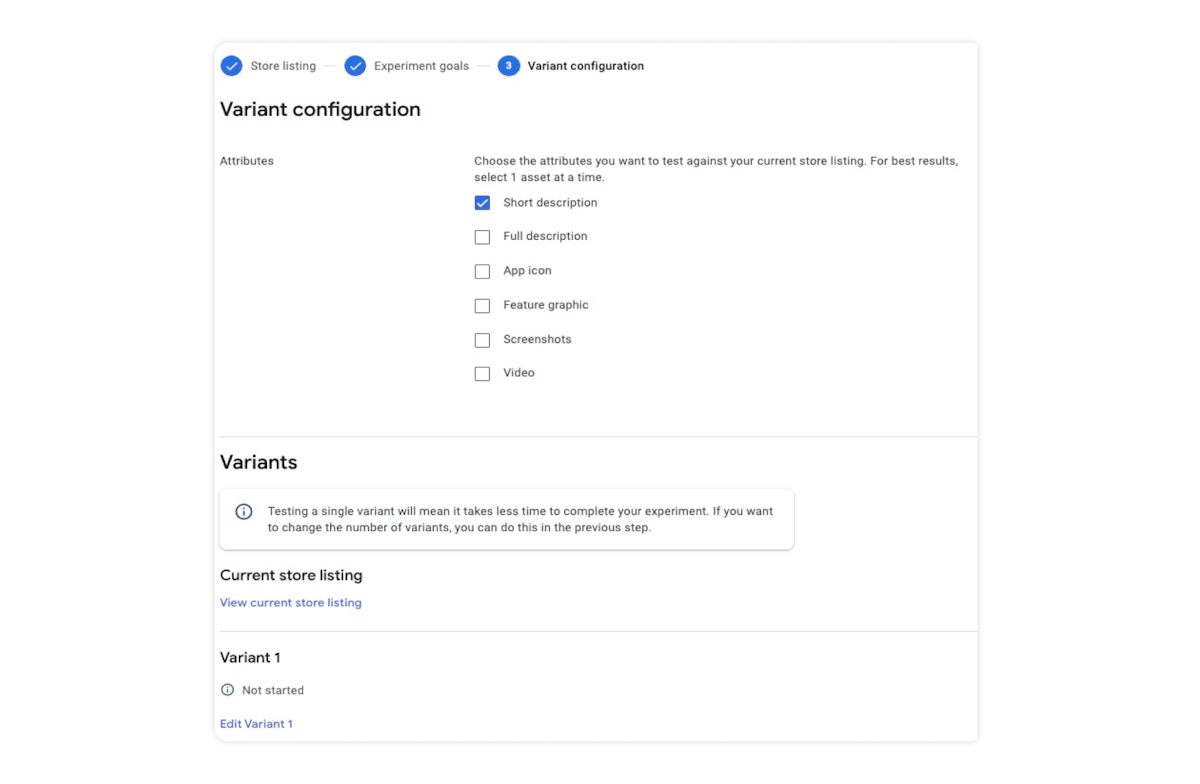

Step 3

The last step is where you can select what you want to test (remember to test one element at a time). Once you have selected what you want to test, you can upload up to 3 metadata or creative variants. After this, you can select “Start experiment” and track the results as they come in!

Source: Google Play Developer Console

Conclusion

Understanding the creative and metadata elements that best resonate with your users is crucial to increasing your app’s conversion rate. That’s why we recommend that every app leverages Google Play store listing experiments. Doing so will allow you to gain insights into your specific user base, which can be applied to your store listing page to increase your installs, conversion rate, and retention rate. New features implemented by Google give you even more power and flexibility to adjust store listing experiments to meet your A/B testing needs!

Oriane Ineza

Alexandra De Clerck

Simon Thillay
